We let data do the heavy lifting

A curated platform focusing on accessible and actionable data, analysis, and more.

Motivation

Data-driven knowledge sharing will be an important driver of future development, environmentally, economically and culturally. We believe that knowledge platforms represent a new era of interaction between technology and humans.

We foresee that easily accessible deep knowledge will be valuable at all levels of organisations and businesses, between organisations, and even between governments and international entities.

Machine learning and artificial intelligence will naturally be integrated into these platforms. The technology will be able to activate knowledge in a timely, dynamic and transparent way, and thereby facilitate deeper insight, more innovation and better decision-making.

Use of models

The benefit of a knowledge platform is that it can handle a multidimensional and multiscale information space simultaneously. Once the data are organised in accordance with the platform protocol, they become available to a broad range of models of various kinds.

As these models develop, they may become crucially important for very different purposes, such as science, mining and farming, as well as for banks, insurance companies, NGOs and policy makers.

Big data, ML and AI

The outcome of predictive ML or AI models depends strongly on the volume of input data. Building global models requires relevant data that describe local and regional variations. Likewise, the data used for training, testing and validation have to be voluminous and rich.

The data volume required by these types of models is often underestimated when only local or regional data are used.
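To illustrate why test and validation data must cover regional variation, the sketch below holds out whole regions rather than random samples, so a model is scored on regions it has never seen. It is a minimal Python sketch under stated assumptions: the `(region, features, label)` record layout, the function name `split_by_region` and the split fractions are hypothetical, not the platform's actual procedure.

```python
import random
from collections import defaultdict


def split_by_region(samples, train_frac=0.7, val_frac=0.15, seed=0):
    """Split samples into train/validation/test sets by whole regions.

    Hypothetical record layout: each sample is a (region, features, label)
    tuple. Keeping each region inside a single set means the test score
    measures generalisation to regions the model has never seen.
    """
    by_region = defaultdict(list)
    for sample in samples:
        by_region[sample[0]].append(sample)

    # Sort first so the shuffle is reproducible for a given seed.
    regions = sorted(by_region)
    random.Random(seed).shuffle(regions)

    n_train = round(len(regions) * train_frac)
    n_val = round(len(regions) * val_frac)
    groups = {
        "train": regions[:n_train],
        "val": regions[n_train:n_train + n_val],
        "test": regions[n_train + n_val:],
    }
    # Expand each group of regions back into its individual samples.
    return {name: [s for r in rs for s in by_region[r]] for name, rs in groups.items()}


if __name__ == "__main__":
    data = [(f"region-{i}", [float(i)], i % 2) for i in range(10) for _ in range(5)]
    splits = split_by_region(data)
    print({name: len(rows) for name, rows in splits.items()})
```

If a model scores well on a random split but poorly on a region-held-out split, that gap suggests the training data do not yet describe enough regional variation.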

Technology

Knowledge platforms can be structured differently depending on the application. When geographic relationships are deemed important, data has traditionally been arranged in a spatial database. However, a new data structure will in most cases be required to accommodate very detailed local data in the same database as regional or even global data.

The idea of a discrete global grid system (DGGS) that holds large volumes of different data in the same hierarchical reference system was developed more than 25 years ago, but the cost of the required hardware has until now limited the use of DGGSs for data management.
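The core idea of a hierarchical reference system can be sketched with cell IDs in which each child extends its parent's ID by one digit, so a detailed local cell and the coarse regional cell that contains it are related by a simple prefix. This is a simplified, hypothetical stand-in rather than a real DGGS: the `cell_id` function below recursively quarters latitude/longitude boxes, so its cells are neither equal-area nor hexagonal, unlike established DGGSs such as ISEA-based grids or H3.

```python
def cell_id(lat, lon, level):
    """Return a hierarchical cell ID for a point at a given resolution level.

    Hypothetical, simplified scheme: the globe is split into two 180x180
    degree root cells ("0" west of 0 deg longitude, "1" east), and each cell
    is recursively quartered. Digit = 2 * row + col inside the parent cell.
    """
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0):
        raise ValueError("latitude/longitude out of range")
    size = 180.0
    lat0 = -90.0
    lon0 = -180.0 if lon < 0.0 else 0.0
    digits = ["0" if lon < 0.0 else "1"]
    for _ in range(level):
        size /= 2.0
        row = 1 if lat >= lat0 + size else 0  # 1 = northern half
        col = 1 if lon >= lon0 + size else 0  # 1 = eastern half
        lat0 += row * size
        lon0 += col * size
        digits.append(str(2 * row + col))
    return "".join(digits)


def parent(cid):
    """The parent cell ID is the child ID with its last digit removed."""
    if len(cid) <= 1:
        raise ValueError("root cells have no parent")
    return cid[:-1]


if __name__ == "__main__":
    fine = cell_id(55.7, 12.6, 10)   # detailed local cell
    coarse = cell_id(55.7, 12.6, 3)  # regional cell
    print(fine, coarse, fine.startswith(coarse))
```

For example, `cell_id(55.7, 12.6, 4)` returns `"12201"`, and every finer cell for the same point starts with that prefix, so data stored at different resolutions can be related by truncating IDs.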

Progress made in browser applications and platform technology as well as decreasing hardware costs have now made DGGS an increasingly attractive solution for organising big data.

Our development

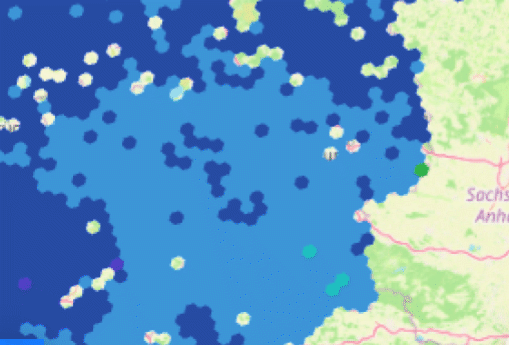

At the “FEM venue 2023” we are presenting the β-version of our integrated DGGS platform and frontend data viewer. By its nature our work is never finished, but we are now able to demonstrate how capable our platform is of handling large amounts of geoscience data and of making them available in real time.

We have integrated machine learning procedures into the import functionality, which allow very detailed information to be maintained and retrieved.

Our frontend is a data viewer rather than a map viewer. When the data cells are arranged on a map surface, they may look similar to a traditional map, but each hexagon on the map surface is the result of pre-processing procedures, and its value is derived from numerous underlying data.
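How many underlying data points can become a single hexagon value can be sketched with planar hexagonal binning: each point is mapped to the pointy-top hexagon containing it (axial coordinates with cube rounding), and the cell value is the mean of its points. This is an illustrative assumption, not the platform's pre-processing; the function names, the use of projected x/y coordinates in metres and the choice of the mean as the aggregate are all hypothetical.

```python
import math
from collections import defaultdict


def cube_round(q, r):
    """Round fractional axial coordinates to the nearest hexagon."""
    s = -q - r
    rq, rr, rs = round(q), round(r), round(s)
    dq, dr, ds = abs(rq - q), abs(rr - r), abs(rs - s)
    # Recompute the coordinate with the largest rounding error so q + r + s == 0.
    if dq > dr and dq > ds:
        rq = -rr - rs
    elif dr > ds:
        rr = -rq - rs
    return rq, rr


def point_to_hex(x, y, size):
    """Axial (q, r) of the pointy-top hexagon (centre-to-corner `size`) containing (x, y)."""
    q = (math.sqrt(3) / 3 * x - y / 3) / size
    r = (2 / 3 * y) / size
    return cube_round(q, r)


def aggregate(points, size):
    """Average (x, y, value) observations per hexagonal cell."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for x, y, value in points:
        cell = point_to_hex(x, y, size)
        sums[cell] += value
        counts[cell] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}


if __name__ == "__main__":
    samples = [(0.0, 0.0, 1.0), (10.0, 12.0, 3.0), (1000.0, 0.0, 5.0)]
    print(aggregate(samples, size=100.0))
```

Here the first two samples fall in the same 100 m hexagon and are averaged, while the third lands in a separate cell; a real pipeline would likely also keep counts or other statistics per cell.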

Predictive models

We are now capable of developing predictive models. More data will be uploaded in the near future, which will make these models increasingly reliable.

The principles of our new attention/transformer model are presented for the first time here at the FEM.
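For readers unfamiliar with attention, the sketch below shows the standard scaled dot-product attention from the transformer literature, softmax(Q K^T / sqrt(d)) V, in plain Python. It illustrates the general mechanism only; it is not the SHH model, whose details are not described here, and treating queries and keys as feature vectors of hexagon cells is a hypothetical example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries and keys are lists of d-dimensional vectors; values holds one
    vector per key. Returns one output vector per query.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


if __name__ == "__main__":
    # Hypothetical example: one cell's features attend to three neighbours.
    query = [[1.0, 0.0]]
    neighbour_keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    neighbour_values = [[10.0], [20.0], [30.0]]
    print(attention(query, neighbour_keys, neighbour_values))
```

In a grid setting one could, for example, let a cell's feature vector act as the query and its neighbours' vectors as keys and values, so the output is a weighted blend of neighbouring information; this pairing is an assumption for illustration.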

Hex-Responder basic

Non-exclusive partnership on Scandinavian Highlands’ (SH) data platform, with access to interact with the SH global learning base as well as with the open customer data provided.

Cooperation on model development (model training, testing, validation, etc.) and access to SHH’s data and model interface, Hex-Responder.

Hex-Responder plus

Purchase of a non-exclusive licence to the platform architecture with integrated frontend, including platform set-up and access to a customised trained model and services.

No access to the SHH global learning database, and SHH experts do not have access to customer data.

Requires a minimum of one year of basic partnership.